From Science Fiction to Reality: The Journey of AI Development

Introduction

Artificial intelligence (AI) has moved from the pages of science fiction into the fabric of contemporary life. The notion that machines could possess human-like cognitive abilities has fueled both scientific inquiry and popular imagination. From early depictions in literature to the learning algorithms of today, AI has become one of the most transformative technologies of our era. This article traces the journey of AI development from its fictional roots to its current realities, emphasizing the breakthrough moments, key influences, and future implications.

Early Concepts in Literature

The seeds of artificial intelligence were planted in the fertile ground of science fiction. Mary Shelley’s novel “Frankenstein,” published in 1818, explored themes of creation and consciousness. Shelley’s creature, though a being of flesh rather than a machine, raised questions about the moral and ethical implications of creating life. Her work set the stage for later explorations into what it means to be sentient.

In the 20th century, the landscape shifted as writers like Isaac Asimov and Philip K. Dick tackled the subject more directly. Asimov introduced the “Three Laws of Robotics” in his stories, which outlined ethical guidelines for robotic behavior and foreshadowed many discussions in modern AI ethics. Dick’s narratives delved into the nature of reality and identity, asking readers to consider whether artificial beings could possess consciousness or rights.

These early literary explorations not only entertained but also inspired futurists and scientists, planting the idea that machines might one day rival human intellect.

The Birth of AI as a Discipline

The formal inception of artificial intelligence as a scientific field occurred in the mid-20th century. In 1956, the Dartmouth Conference, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, marked the birth of AI as a research domain. The conference was pivotal, as it gathered visionary thinkers who shared the ambition of simulating human intelligence.

During this period, early AI programs such as the Logic Theorist and the General Problem Solver demonstrated that machines could prove theorems in symbolic logic and work through formalized puzzles, while other early programs learned to play games such as checkers. These accomplishments ignited optimism and marked the beginning of a new era in computational sciences. However, the initial excitement was paired with significant challenges, including limitations in computing power, data scarcity, and the complexity of human cognition.

The AI Winters

Despite early successes, the AI field faced numerous setbacks, leading to two significant “AI winters,” the first beginning in the mid-1970s and the second in the late 1980s. During these periods, funding dwindled and public interest waned, primarily because of unmet expectations and the difficulty of creating truly intelligent systems. Researchers found it hard to scale early algorithms beyond small, well-defined problems and struggled with tasks such as understanding natural language and learning from data.

The setbacks prompted a re-evaluation of AI goals and methods. Critics questioned whether machines could truly replicate human cognitive processes or if they were merely simulating intelligence. Nevertheless, the groundwork laid during these periods was vital for future advancements, as researchers regrouped and refined their approaches.

Revitalization through Machine Learning

The revival of AI in the 21st century was powered primarily by advances in machine learning, particularly deep learning, a subset of machine learning that uses neural networks with many layers. Fueled by increasing computational power and vast amounts of data (Big Data), researchers began to make significant strides in various AI applications.

In 2012, a breakthrough came when Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton of the University of Toronto won the ImageNet competition with a deep convolutional neural network. Their network classified images far more accurately than competing entries, showcasing the potential of AI in computer vision. This moment marked a turning point, signaling the efficacy of deep learning approaches and sparking renewed interest and investment in AI research.

In parallel, natural language processing saw significant advancements, with models like Google’s BERT and OpenAI’s GPT series, both built on the transformer architecture, demonstrating the ability to interpret and generate human language with striking fluency. These developments have not only shaped consumer applications like chatbots and virtual assistants but have also influenced industries ranging from healthcare to finance.

AI in Everyday Life

As AI technologies matured, their integration into everyday life became increasingly evident. The penetration of AI systems into consumer products has been widespread, from recommendation algorithms on streaming platforms to intelligent personal assistants like Siri and Alexa. Companies like Amazon and Netflix harness AI to enhance customer experiences, making suggestions tailored to individual preferences.
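
The tailored suggestions described above can be illustrated with a small sketch of user-based collaborative filtering, one common family of recommendation techniques. This is not how any particular company's system works; the users, film titles, ratings, and function names below are all hypothetical, and production systems are far more elaborate.

```python
from math import sqrt

# Hypothetical ratings (1-5) invented for illustration; not real platform data.
ratings = {
    "ana":   {"Film A": 5, "Film B": 4, "Film C": 1},
    "ben":   {"Film A": 4, "Film B": 5, "Film D": 5},
    "chloe": {"Film C": 5, "Film E": 4, "Film A": 1},
}

def cosine_similarity(a, b):
    """Cosine similarity computed over the titles both users have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = sqrt(sum(a[t] ** 2 for t in shared))
    norm_b = sqrt(sum(b[t] ** 2 for t in shared))
    return dot / (norm_a * norm_b)

def recommend(user, ratings, top_n=3):
    """Rank titles the user has not rated by the similarity-weighted
    average rating given by other users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        if sim <= 0:
            continue
        for title, rating in other_ratings.items():
            if title in ratings[user]:
                continue  # skip titles the user has already rated
            scores[title] = scores.get(title, 0.0) + sim * rating
            weights[title] = weights.get(title, 0.0) + sim
    ranked = sorted(scores, key=lambda t: scores[t] / weights[t], reverse=True)
    return ranked[:top_n]

print(recommend("ana", ratings))  # → ['Film D', 'Film E']
```

Here "ana" rates films much as "ben" does, so the film that "ben" liked and "ana" has not yet rated is ranked first.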

Additionally, the application of AI in sectors such as healthcare has been groundbreaking. Algorithms can now analyze medical images, help clinicians diagnose illnesses, and estimate likely patient outcomes. In a world grappling with the challenges of an aging population, AI’s ability to assist in clinical settings holds promise for improved patient care and operational efficiency.

Nonetheless, this rapid integration raises questions about ethical considerations and social implications. Concerns around privacy, surveillance, and bias in AI algorithms are garnering attention, prompting calls for more rigorous regulations and ethical frameworks in AI development.

The Ethical Landscape of AI

The intersection of AI with ethics has emerged as a critical area of discourse. The capabilities of AI systems introduce dilemmas surrounding decision-making, accountability, and bias. As algorithms increasingly influence decisions in hiring, law enforcement, and credit scoring, the potential for discrimination and unfair treatment becomes apparent.
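
One simple way to make the concern about unequal treatment concrete is to compare how often an automated system selects people from different groups. The sketch below computes per-group selection rates and their ratio on a made-up set of hiring decisions. The data, group labels, and function names are hypothetical, and a single ratio is only a rough first check, not a complete fairness assessment.

```python
from collections import defaultdict

# Hypothetical screening outcomes: (group label, 1 if advanced, 0 if rejected).
decisions = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]

def selection_rates(decisions):
    """Fraction of candidates selected within each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        selected[group] += outcome
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # → {'group_a': 0.75, 'group_b': 0.25}
print(round(ratio, 2))  # → 0.33
```

A ratio well below 1.0, as in this invented example, would be a signal to investigate why the system treats the groups differently.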

Organizations like the Partnership on AI and initiatives from institutions such as the UN are advocating for responsible AI development that prioritizes fairness, transparency, and inclusivity. These conversations have led to the development of ethical guidelines and frameworks aimed at mitigating bias and fostering accountability in AI systems.

Furthermore, as AI begins to operate in more sensitive areas, such as autonomous vehicles or healthcare diagnostics, the stakes for ethical decision-making become even higher. A multidisciplinary approach that includes ethicists, technologists, and policymakers is essential to navigate the complex moral terrain that AI presents.

The Future of AI Development

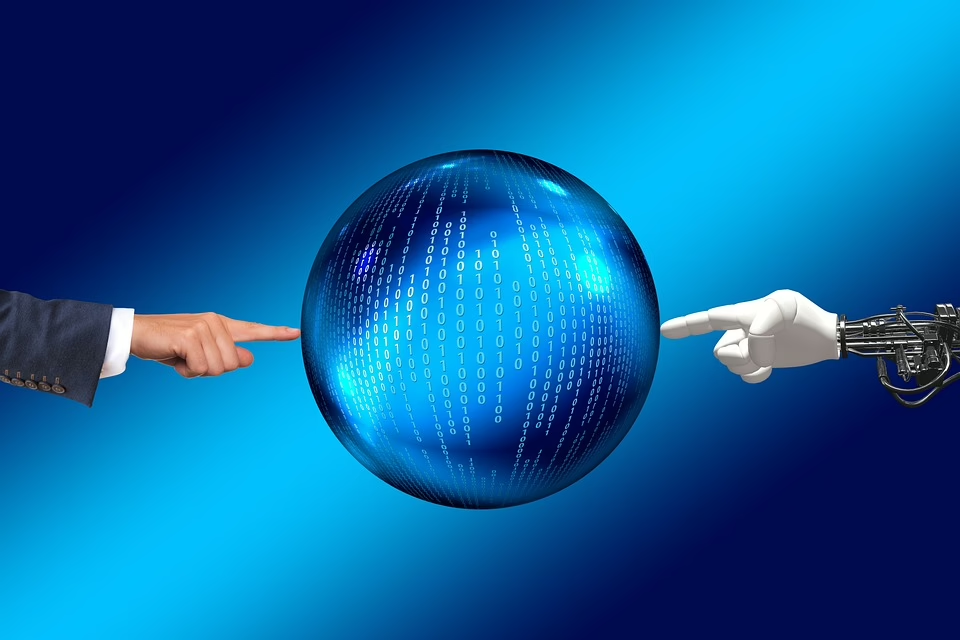

Looking ahead, the trajectory of AI development is poised for further evolution. The emergence of hybrid AI systems, which combine rule-based approaches with machine learning techniques, offers the potential to create more robust and interpretable models. Developments in explainable AI (XAI) are aimed at making AI decisions more transparent, thereby enhancing trust and acceptance.
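
The hybrid idea can be sketched in a few lines: hand-written rules are checked first and return a decision together with the name of the rule that fired, and a learned score is consulted only when no rule applies. Everything here is an assumption made for illustration: the message-filtering task, the rule set, and the weights, which stand in for values a real system would learn from data.

```python
from math import exp

# Hypothetical weights standing in for a trained model;
# a real system would learn these from labeled data.
WEIGHTS = {"free": 1.5, "winner": 2.0, "meeting": -1.0}
BIAS = -1.0

def learned_score(text):
    """Logistic score in (0, 1) from a bag-of-words linear model."""
    words = text.lower().split()
    z = BIAS + sum(WEIGHTS.get(word, 0.0) for word in words)
    return 1.0 / (1.0 + exp(-z))

# Hand-written rules as (name, predicate, label), checked in order.
RULES = [
    ("trusted sender", lambda msg: msg["sender"].endswith("@example.org"), "ok"),
    ("empty body", lambda msg: not msg["body"].strip(), "spam"),
]

def classify(msg, threshold=0.5):
    """Return (label, explanation): rules first, learned score as fallback."""
    for name, predicate, label in RULES:
        if predicate(msg):
            return label, f"rule: {name}"
    score = learned_score(msg["body"])
    label = "spam" if score >= threshold else "ok"
    return label, f"model score: {score:.2f}"

print(classify({"sender": "a@example.org", "body": "free winner"}))
# → ('ok', 'rule: trusted sender')
print(classify({"sender": "x@other.test", "body": "free winner"}))
# → ('spam', 'model score: 0.92')
```

Returning an explanation alongside each label is a small gesture toward interpretability: a rule-based decision names its rule, while a model-based decision exposes its score.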

Moreover, the focus on human-AI collaboration is garnering interest. As machines take over routine tasks, humans can focus on more creative, strategic, and interpersonal responsibilities. This synergy could redefine the future of work, raising questions about job displacement and skills development. Preparing the workforce for this shift is crucial to ensuring that the transition to an AI-driven economy benefits everyone.

The global landscape of AI is also shifting, with countries vying for leadership in AI technology. Governments are increasingly recognizing AI’s potential to drive economic growth and innovation. Initiatives promoting research, development, and international collaboration are becoming more prevalent as nations aim to harness the power of AI for societal benefit.

Conclusion

The journey of AI development traces an evolution from fictional ideas to a practical reality that fundamentally shapes our lives today. As we stand on the cusp of further advancements, the lessons learned from past challenges and successes will be invaluable in navigating the complexities of AI’s future.

The dual responsibility of harnessing AI’s potential benefits while addressing ethical and societal implications requires collaborative efforts from technologists, ethicists, and policymakers. With ongoing innovation and a commitment to responsible development, the impact of AI on society can be transformative, paving the way for a future where human and artificial intelligences coexist harmoniously.

References

- Asimov, I. (1950). “I, Robot.” New York: Gnome Press.

- Dick, P. K. (1968). “Do Androids Dream of Electric Sheep?” New York: Doubleday.

- McCarthy, J., Minsky, M., Rochester, N., & Shannon, C. (1955). “A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence.”

- Krizhevsky, A., Sutskever, I., & Hinton, G. (2012). “ImageNet Classification with Deep Convolutional Neural Networks.” Advances in Neural Information Processing Systems.

- Russell, S., & Norvig, P. (2020). “Artificial Intelligence: A Modern Approach.” Pearson.

- Binns, R. (2018). “Fairness in Machine Learning: Lessons from Political Philosophy.” Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency.

- Partnership on AI. “Ethical AI.” Retrieved from partnershiponai.org.

- UN. (2019). “The Age of Digital Interdependence: Report of the UN Secretary-General’s High-Level Panel on Digital Cooperation.”
