Ethics in AI: Navigating the Moral Landscape of Machine Intelligence

As artificial intelligence (AI) continues to advance at breakneck speed, it is imperative to examine the ethical implications of its development and use. The integration of AI into sectors ranging from healthcare to finance raises critical questions about morality, accountability, and social impact. This article explores these issues and offers an overview of the main ethical considerations in AI.

The Rise of AI: Opportunities and Challenges

AI holds immense potential to transform industries and improve everyday life. In healthcare, for instance, AI algorithms can analyze large volumes of medical data to assist clinicians with diagnosis and treatment recommendations. In finance, machine learning models are used for tasks such as fraud detection, credit scoring, and forecasting market movements. These advances, however, come with challenges that call for careful examination of their ethical ramifications.

1. Defining AI Ethics

At its core, AI ethics refers to the moral principles guiding the development and implementation of artificial intelligence systems. This includes considerations of fairness, accountability, transparency, and the impact on human rights. As AI technology evolves, so too must our understanding of its ethical implications.

1.1 Fairness

Fairness in AI is often examined through the lens of bias. If an AI system is trained on biased or unrepresentative data, it may perpetuate or exacerbate existing inequalities. For example, independent evaluations of facial recognition and facial analysis systems have found markedly higher error rates for some demographic groups, notably people with darker skin tones, raising concerns about discrimination. Such findings underline the need to design and evaluate AI systems so that they deliver equitable outcomes across demographic groups.
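Bias of this kind is usually detected by breaking evaluation metrics down by group rather than reporting a single overall accuracy. The sketch below is a minimal illustration of that idea. The function names `error_rates_by_group` and `max_disparity` and the sample records are hypothetical, invented for this example and not drawn from any real audit. The code computes the error rate for each group and the largest gap between groups.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Return {group: error_rate} for (group, y_true, y_pred) records.

    Hypothetical helper for illustration only; real audits usually also
    separate errors into false positives and false negatives.
    """
    totals = defaultdict(int)   # number of examples seen per group
    errors = defaultdict(int)   # number of misclassified examples per group
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

def max_disparity(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical evaluation data: (group, true label, predicted label)
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]

rates = error_rates_by_group(records)
print(rates)                 # {'A': 0.0, 'B': 0.5}
print(max_disparity(rates))  # 0.5
```

In this toy data the overall accuracy is 75% (6 of 8 correct). That single figure hides the fact that every error falls on group B, which is exactly the pattern a disaggregated evaluation is meant to surface.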

1.2 Accountability

As AI systems become more autonomous, determining accountability for their actions grows more complicated. If an algorithm makes a decision that leads to harm, who is responsible? Is it the developer, the user, or the organization that deployed the AI? Establishing clear accountability frameworks is crucial for ethical AI practices.

1.3 Transparency

Transparency is essential for trust in AI systems. Users must have access to information about how these algorithms work, the data they use, and the processes behind their decision-making. A lack of transparency can lead to skepticism and distrust, undermining the potential benefits that AI can offer.

1.4 Human Rights

The deployment of AI should not infringe on fundamental human rights. For example, technologies used for surveillance must be scrutinized to prevent violations of privacy. As AI influences more aspects of daily life, safeguarding human rights becomes a paramount concern.

The Ethical Dilemmas of AI

As we navigate the moral landscape of machine intelligence, several ethical dilemmas arise. These dilemmas often stem from the intersection of technological capabilities and societal values.

2. The Trolley Problem and Autonomous Vehicles

One of the most widely discussed ethical dilemmas in AI is the “trolley problem.” Originally a philosophical thought experiment about a runaway trolley, it is often adapted to a scenario in which a driverless car faces an unavoidable collision and must choose between harming a group of people and harming a single individual. How should the vehicle be programmed to make such decisions? The dilemma raises questions about how human lives are valued and which societal norms should apply. Should the algorithm prioritize the lives of the many over the few? And who decides these moral guidelines?

3. Surveillance and Privacy

The rise of AI-powered surveillance systems poses significant ethical challenges regarding privacy. While these technologies can enhance security, they also risk creating a society in which individuals are constantly monitored. Balancing public safety against individual privacy rights is therefore a central challenge, and ethical frameworks are needed to set boundaries on how and where surveillance technologies are applied.

4. Job Displacement

The integration of AI into the workforce has sparked fears of widespread job displacement. While AI can augment human capabilities and improve efficiency, it may also render certain job roles obsolete. This presents ethical questions regarding the responsibility of organizations and governments to provide retraining and social support for affected workers. The transition to an AI-driven economy must consider the ethical obligation to safeguard employment opportunities.

5. Emotional AI and Human Interaction

The emergence of emotion-aware AI, designed to detect and respond to human emotional cues such as facial expressions, tone of voice, or word choice, raises ethical concerns about manipulation. For instance, chatbots that simulate empathy can improve the user experience but also risk creating deceptive interactions in which users attribute genuine understanding to the system. Ethical guidelines are needed to govern the use of such systems and ensure that they promote well-being rather than exploitation.

Ethical Frameworks and Guidelines

To address the ethical challenges posed by AI, various frameworks and guidelines have been proposed by researchers, organizations, and governments.

6. The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has published Ethically Aligned Design, a set of guidelines for the ethical development and use of AI. Its general principles include human rights, well-being, data agency (individuals' control over their personal data), transparency, and accountability. The initiative emphasizes a comprehensive approach to AI ethics that incorporates diverse perspectives.

7. The European Union’s AI Act

The European Union has moved to regulate AI through the AI Act, proposed by the European Commission in 2021 and formally adopted in 2024. The regulation establishes a comprehensive framework that categorizes AI applications by risk level. High-risk applications, such as many uses of biometric identification, are subject to stringent requirements intended to promote ethical standards in AI development. Certain practices judged to pose an unacceptable risk are prohibited outright.

8. The Asilomar AI Principles

Developed at the 2017 Asilomar Conference on Beneficial AI, organized by the Future of Life Institute, the 23 Asilomar AI Principles serve as a foundation for the ethical development of AI. They emphasize that AI should benefit humanity while minimizing harm. They also state that highly autonomous AI systems should be designed so that their goals and behaviors align with human values.

Implementing Ethics in AI Development

To ensure ethical AI practices, developers and organizations must integrate ethical considerations into the entire lifecycle of AI systems. This requires collaboration among stakeholders, including ethicists, technologists, policymakers, and the public.

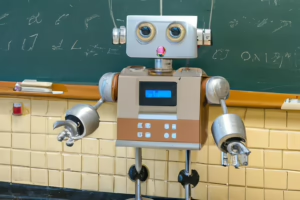

9. Education and Training

Creating a workforce that is aware of ethical implications is vital. Educational initiatives focused on AI ethics should become standard in computer science curricula. Future developers must be equipped with the skills to address ethical dilemmas and understand their societal impact.

10. Interdisciplinary Collaboration

AI ethics calls for interdisciplinary collaboration that brings together experts from varying fields. Engaging ethicists, social scientists, and legal scholars can provide comprehensive insights into the implications of AI technology, ensuring that diverse perspectives are considered.

11. Public Engagement

Engaging the public in discussions about AI and ethics is essential. Open dialogues can help demystify AI technologies, encourage transparency, and foster trust between developers and users. By involving diverse voices, we can better understand societal concerns and expectations.

Conclusion

The ethical considerations surrounding AI are complex and multifaceted. As we navigate this moral landscape, we must strive for fairness, accountability, transparency, and the protection of human rights. Implementing robust ethical frameworks and fostering interdisciplinary collaboration will be vital in guiding the responsible development of AI technologies. In doing so, we can harness the transformative potential of AI while safeguarding the values that define our humanity.

This article offers only an overview of the ethical issues surrounding AI, and the discussion must continue to evolve as the technology advances. The moral landscape of machine intelligence remains dynamic, and collective efforts will shape its future. Ensuring that AI serves humanity ethically should remain a guiding principle for its development and deployment.

References

- Dignum, V. (2018). Responsible Artificial Intelligence: Designing AI for Human Values. AI & Society, 33(4), 517-526.

- Jobin, A., Ienca, M., & Vayena, E. (2019). The Global Landscape of AI Ethics Guidelines. Nature Machine Intelligence, 1(9), 389-399.

- European Commission. (2021). Proposal for a Regulation Laying Down Harmonized Rules on Artificial Intelligence.

- The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. (2019). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems.

- Russell, S., & Norvig, P. (2020). Artificial Intelligence: A Modern Approach. 4th Edition, Pearson.

